Optimization is a discipline which uses goals and constraints to identify the mathematically best decision to make.

Optimization models

The Meta-Problem Method helps you decide which problem to solve.

How optimization provides a foundation for better problem solving

1.

2. Once the consequences of decisions are fully described with a model, we can explore exactly how changes to our model would change the decisions we make and their consequences.

3. Measuring your decisions against outcomes is the best way to understand what you really want.

Note to readers:

If you are not already familiar with math models like linear programming, you might want to refer to the Meta-Problem Method page instead. One core idea from the methodology that you need is the idea of incomplete versus complete problems. Read more about the definition here.

Deterministic problems

Definitions

p is a completely defined problem, which means all solutions to p have the same value.

b is an incompletely defined problem (a problem-space). Some parts of the problem (goals and/or constraints) are undefined, so we need to make assumptions to arrive at a solvable, completely defined problem. Different assumptions may lead to solutions that produce different value for stakeholders.

e is the effort it takes to solve a given problem with a particular method. Different methods to solve the same problem can take different levels of effort.

Optimization model

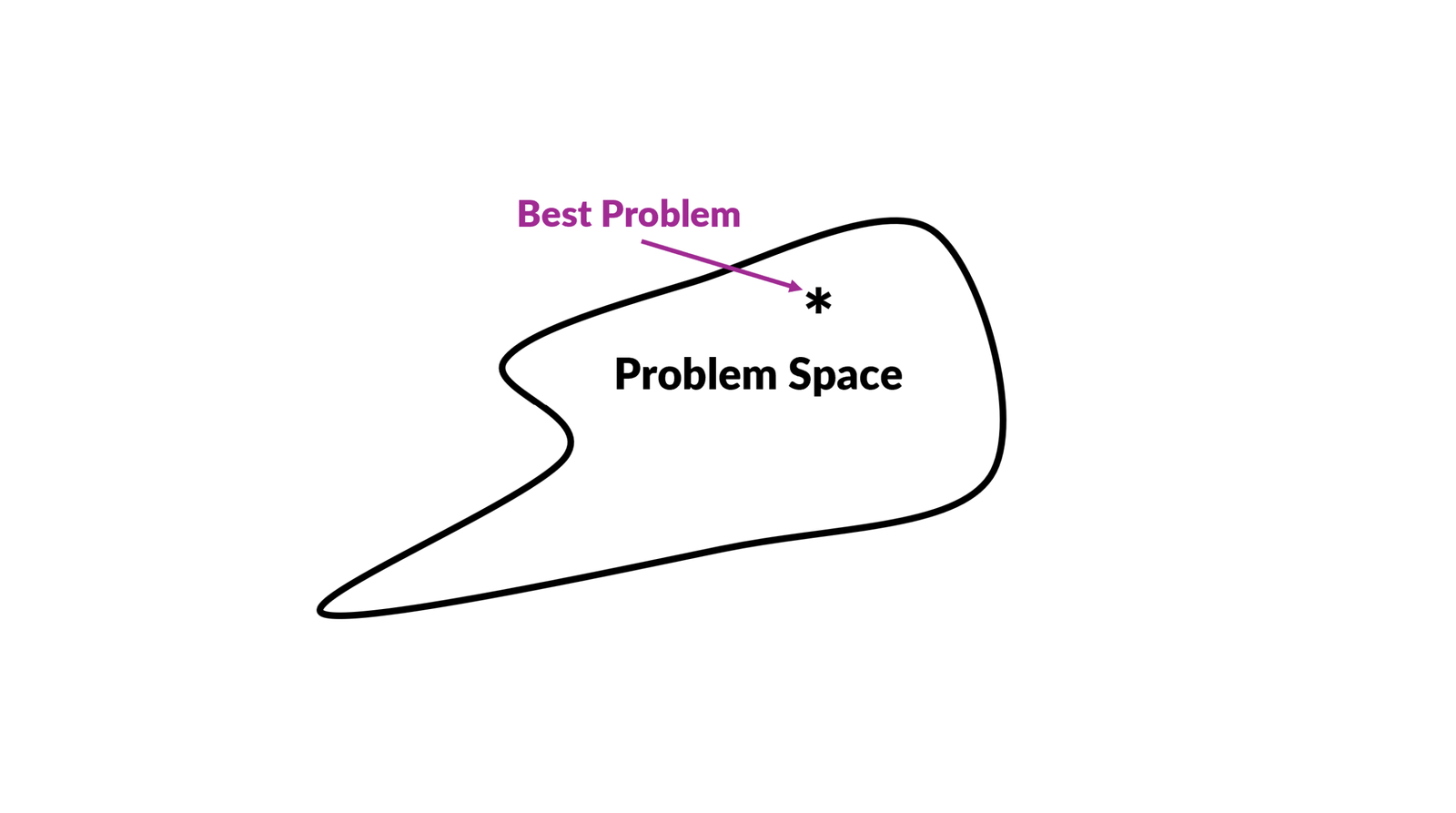

Given an incompletely defined problem b, the deterministic optimization problem is the choice of which completely defined problem p to select from the problem-space.

The objective function is to maximize the multi-dimensional value of solving the chosen problem p less the multi-dimensional effort of solving it.

The solution will then be the best choice of problem to solve, given the options.
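The description above can be written compactly. This is only a sketch of the text, not a transcription of the figure, and it assumes hypothetical notation that the definitions do not introduce: P(b) for the set of completely defined problems in the problem-space of b, v(p) for the value of solving p, and e(p) for the effort of solving it.

```latex
\max_{p \in P(b)} \; v(p) - e(p)
```

Because value and effort are multi-dimensional, v(p) - e(p) is a vector in this sketch. Comparing candidates in practice needs some way to rank those vectors, such as weights or a priority order, which the sketch leaves open.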

Insights

1. Lower effort can offset higher value. For example, even if it is less accurate, writing your problem in a way that is easier to solve may be worth it.

2. Solving one problem might not make it easier to solve another related problem, even if both are in the problem-space of b. For example, a minimum viable product may have major gaps compared to one you would develop for the long term.

3. Your choice of problem p from the problem-space might entail different solving methods. A linear program can be solved with simplex, while an integer program might use branch-and-bound.

4. If we have settled on a completely defined problem p in the problem-space of b, solving the meta-problem means choosing the lowest-effort method to solve it.

5. If the effort to solve a problem is higher than the value, the best choice is to do nothing.

6. Given an incompletely defined problem b, there is a set of possible optimal solutions that correspond to different choices of assumptions within the problem-space.
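Insights 1, 4, and 5 can be illustrated with a small sketch. It assumes that value and effort have already been collapsed to single numbers, which the method itself does not require. The `Candidate` class, the `choose_problem` function, and all figures are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Candidate:
    """One completely defined problem p from the problem-space of b."""
    name: str
    value: float  # assumed scalar value of solving p
    method_efforts: dict = field(default_factory=dict)  # method name -> effort

    def best_method(self):
        # Insight 4: for a fixed p, pick the lowest-effort solving method.
        return min(self.method_efforts.items(), key=lambda item: item[1])

    def net(self):
        # Objective: value of solving p less the effort of solving it.
        _, effort = self.best_method()
        return self.value - effort


# Insight 5: doing nothing is always an option, with zero value and zero effort.
DO_NOTHING = Candidate("do nothing", 0.0, {"none": 0.0})


def choose_problem(candidates):
    """Return the candidate with the highest net value, or DO_NOTHING."""
    return max([DO_NOTHING, *candidates], key=lambda c: c.net())


# Illustrative numbers only (assumptions, not data from the method).
candidates = [
    Candidate("detailed integer program", 100.0,
              {"branch-and-bound": 70.0, "heuristic": 75.0}),
    Candidate("simplified linear program", 90.0, {"simplex": 20.0}),
]
best = choose_problem(candidates)
print(best.name, best.best_method(), best.net())
```

With these made-up numbers, the less accurate linear program is chosen because its lower effort more than offsets its lower value (insight 1). If every candidate's effort exceeded its value, `choose_problem` would return the do-nothing option.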

Practical usage

When applying the Meta-Problem Method, we don’t typically create an actual optimization math program over the problem-space.

Instead, we use it as a framework to explore the neighborhood in problem-space of an initial completely defined problem p. Our goal is to identify any problems in problem-space which are better than our starting point because they are feasible and have a better value for the objective function.

Following the Meta-Problem Method enables us to develop a list of criteria for an alternative problem that would be better to solve. This could include higher profit, benefits received sooner, more ethical solutions, less environmental impact, lower effort to solve, and any other criteria we might consider including in the objective function or constraints of the meta-problem.
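As a rough illustration of exploring the neighborhood of a starting problem, the sketch below scores a few alternative problems against a list of criteria and keeps only those that are feasible and score better than the starting point. The weights, criteria names, and numbers are all assumptions made up for this example. A weighted sum is only one of several ways to compare multi-dimensional criteria.

```python
# Hypothetical weights: positive for benefits, negative for costs.
WEIGHTS = {"profit": 1.0, "months_to_benefit": -2.0, "effort": -1.0}


def score(criteria):
    """Collapse multi-dimensional criteria into one number (an assumption)."""
    return sum(WEIGHTS[name] * criteria[name] for name in WEIGHTS)


def better_neighbors(start, neighbors):
    """Return feasible neighbors that beat the starting problem, best first."""
    base = score(start["criteria"])
    improved = [
        n for n in neighbors
        if n["feasible"] and score(n["criteria"]) > base
    ]
    return sorted(improved, key=lambda n: score(n["criteria"]), reverse=True)


# Illustrative starting problem p and three neighbors in problem-space.
start = {
    "name": "original scope",
    "feasible": True,
    "criteria": {"profit": 50.0, "months_to_benefit": 6.0, "effort": 20.0},
}
neighbors = [
    {"name": "phase 1 only", "feasible": True,
     "criteria": {"profit": 40.0, "months_to_benefit": 2.0, "effort": 10.0}},
    {"name": "full redesign", "feasible": True,
     "criteria": {"profit": 90.0, "months_to_benefit": 12.0, "effort": 60.0}},
    {"name": "ignore capacity limits", "feasible": False,
     "criteria": {"profit": 80.0, "months_to_benefit": 3.0, "effort": 10.0}},
]

for candidate in better_neighbors(start, neighbors):
    print(candidate["name"], score(candidate["criteria"]))
```

Here the infeasible neighbor is excluded even though it scores highest, and the larger redesign is rejected because its extra profit does not cover the added delay and effort under the assumed weights.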

This mindset means we can also evaluate the implied solution-space instead of only focusing on the problem-space.

Stochastic problems

Adapting for problems with uncertainty

Formally applying the Meta-Problem Method given a stochastic problem is tricky as we have the same complexity as in the deterministic case, but now we must consider the unknown as well.

Since we solve the optimization model before solving the actual problem, we may realize some uncertainty outside the scope of our analysis. However, we may also realize some uncertainty as we are identifying which problem to solve.

Because of this shift, our focus for stochastic problems is to identify a series of subproblems which will converge to the optimal problem to solve.

The objective function will be to maximize the sum of the multi-dimensional value of solving the chosen problems over the series, less the sum of the multi-dimensional effort of solving them.
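In symbols, this objective might be sketched as follows. The notation is hypothetical: p_1, ..., p_T is the series of subproblems, and v and e are the value and effort of solving each one.

```latex
\max_{p_1, \dots, p_T} \; \sum_{t=1}^{T} v(p_t) \; - \; \sum_{t=1}^{T} e(p_t)
```

Two things are left open by this sketch. Each p_t may be chosen only after seeing what solving the earlier subproblems revealed. And because v and e are uncertain in advance, a formal treatment would likely optimize an expectation of this quantity, which is an assumption beyond what the text above states.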

Practical usage

When applying the Meta-Problem Method, the stochastic formulation explicitly accounts for the discovery process that happens as we explore the problem and solution spaces.

As an example, we often don’t learn all the criteria for a decision problem until we explore the problem-space. Said another way, it is often only when you present a recommendation that decision makers raise other criteria they care about. The proposed solution may not satisfy these late-arriving requirements.

Knowing that there is uncertainty which you can resolve depending on which problem you solve in the series, you can be strategic about which problems you solve early on.

Sometimes our biggest uncertainty has to do with the effort it will take to solve a problem. This insight explains project management methodologies like Agile, which ask the team to re-assess at regular intervals whether the problem is “solved.”
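The re-assessment idea can be sketched as a loop over a series of subproblems. Before each one, the team decides whether the expected value still exceeds the effort estimate, adjusted by how badly the previous estimate was off. The update rule (scaling by the most recent overrun ratio), the `solve_series` function, and all numbers are assumptions for illustration. They are not part of the Meta-Problem Method or of Agile.

```python
def solve_series(subproblems):
    """Work through subproblems in order, re-assessing before each one.

    Each subproblem is (name, expected_value, estimated_effort, actual_effort).
    The actual effort is only learned after the subproblem is solved.
    """
    solved = []
    total_net = 0.0
    overrun = 1.0  # ratio of actual to estimated effort seen most recently

    for name, value, estimated, actual in subproblems:
        adjusted = estimated * overrun  # estimate corrected by what we learned
        if value <= adjusted:
            break  # no longer worth the effort: stop here
        solved.append(name)
        total_net += value - actual
        overrun = actual / estimated  # uncertainty resolved by solving this one

    return solved, total_net


# Illustrative series (made-up numbers): early work reveals a 2x effort overrun.
series = [
    ("prototype", 30.0, 10.0, 20.0),
    ("pilot", 40.0, 15.0, 30.0),
    ("full rollout", 50.0, 30.0, 65.0),
]
solved, total_net = solve_series(series)
print(solved, total_net)
```

On the original estimate alone, the full rollout looks worthwhile (value 50 against an estimated effort of 30). After two subproblems each took twice their estimated effort, the adjusted estimate is 60, so the loop stops before committing to it.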

